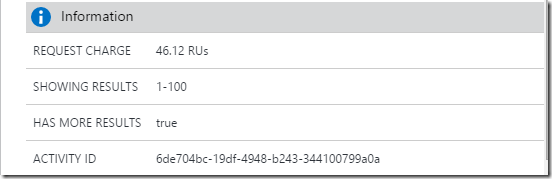

I am working on one of these IoT projects at the moment, and one of my requirements is to stream events from devices into DocumentDB. My simplified architecture looks like this.

Event Hub to Worker Role to DocumentDB

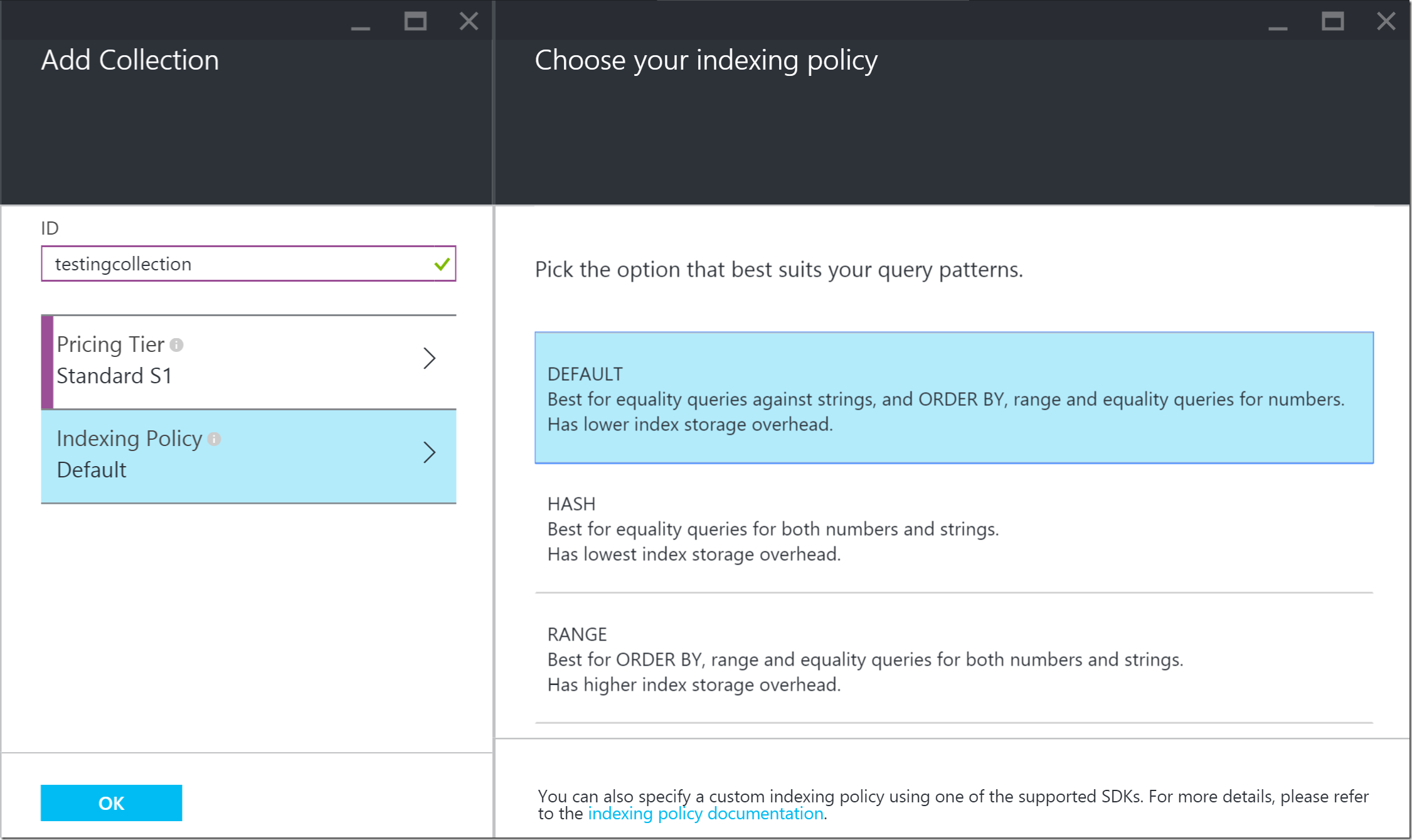

Each collection in DocumentDB is provisioned with a certain amount of throughput, measured in RUs (Request Units). If you exceed that throughput you will receive a 429 “Too Many Requests” error. To get around this you can:

1. Move your collection to a more performant level (S1, S2, S3)
2. Implement throttling retries
3. Tune your requests so they need fewer RUs
4. Implement partitioning

#1 makes sense because if you are hitting DocumentDB that hard, you need the right “SKU” for the job (but what if S3 is not enough?).

#2 means catching the error and retrying the request (I’ll show an example).

#3, in an IoT scenario, could be taken to mean “slow down the sensors”, but it also covers your indexing strategy (switching to lazy indexing and/or excluding paths from the index).

#4 is, IMHO, the best and most logical option, because it allows you to scale out. Brilliant.
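To make #4 a little more concrete, here is a minimal sketch of client-side hash partitioning, assuming each partition is a separate collection and the device ID is the partition key. The collection links (`dbs/iot/colls/events-0` and so on) and the `ResolveCollection` helper are hypothetical, and FNV-1a is simply my choice of stable hash for illustration; it is not something the DocumentDB SDK prescribes.

```csharp
using System;
using System.Text;

// Hypothetical collection links: one collection per partition.
string[] collectionLinks =
{
    "dbs/iot/colls/events-0",
    "dbs/iot/colls/events-1",
    "dbs/iot/colls/events-2",
};

// 32-bit FNV-1a over the UTF-8 bytes of the key. string.GetHashCode is avoided
// because it is not guaranteed to be stable across processes.
static uint Fnv1a(string key)
{
    uint hash = 2166136261;
    foreach (byte b in Encoding.UTF8.GetBytes(key))
    {
        hash ^= b;
        hash = unchecked(hash * 16777619);
    }
    return hash;
}

// Hypothetical helper: every event from the same device maps to the same collection.
static string ResolveCollection(string deviceId, string[] links) =>
    links[Fnv1a(deviceId) % (uint)links.Length];

Console.WriteLine(ResolveCollection("sensor-42", collectionLinks));
```

Because the hash is stable, all events from one device land in the same collection, and adding collections spreads the RU load. Bear in mind that with a simple modulo, changing the number of collections remaps most keys, so a real scheme needs a plan for rebalancing.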

Scenario

In my scenario I want the cheapest option. Even though I think #4 is the right one, it will cost me money for each collection, so I want to look at #2. I’ll first show you what I think is the long way around and then the “auto retry” option.

The Verbose Route

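```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

// collectionLink is the target collection's self-link
// (the original snippet referenced an undefined "coll" variable).
public async Task SaveToDocDb(string uri, string key, string collectionLink, dynamic jsonDocToSave)
{
    using (var client = new DocumentClient(new Uri(uri), key))
    {
        var queryDone = false;
        while (!queryDone)
        {
            try
            {
                await client.CreateDocumentAsync(collectionLink, jsonDocToSave);
                queryDone = true;
            }
            catch (DocumentClientException documentClientException)
            {
                var statusCode = (int)documentClientException.StatusCode;
                // 429 = Too Many Requests: wait as long as the service asks, without blocking the thread
                if (statusCode == 429)
                    await Task.Delay(documentClientException.RetryAfter);
                // add other error codes to trap here, e.g. 503 - Service Unavailable
                else
                    throw;
            }
            catch (AggregateException aggregateException)
                when (aggregateException.InnerException is DocumentClientException)
            {
                // Same handling when the DocumentClientException arrives wrapped in an AggregateException
                var inner = (DocumentClientException)aggregateException.InnerException;
                if ((int)inner.StatusCode == 429)
                    await Task.Delay(inner.RetryAfter);
                else
                    throw;
            }
        }
    }
}
```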

The above is a very common pattern: TRY…CATCH, get the error number and take action. I wanted something less verbose, with automatic retry logic that made sense.
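The heart of the verbose route can be sketched without the SDK, which makes it easy to run and test. In this sketch `FakeCreateDocumentAsync` is a hypothetical stand-in for `CreateDocumentAsync` that returns a status code and a retry-after interval instead of throwing; it is throttled twice before succeeding. Unlike the loop above, it also caps the number of attempts, which is my addition rather than part of the original code.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for CreateDocumentAsync: the first two calls are
// "throttled" (429) and ask the caller to wait 50 ms; later calls succeed (201).
int calls = 0;
Task<(int StatusCode, TimeSpan RetryAfter)> FakeCreateDocumentAsync()
{
    calls++;
    (int StatusCode, TimeSpan RetryAfter) result =
        calls <= 2 ? (429, TimeSpan.FromMilliseconds(50)) : (201, TimeSpan.Zero);
    return Task.FromResult(result);
}

// Retry while throttled, waiting for the interval the service suggests,
// but give up after maxAttempts so sustained overload cannot loop forever.
async Task<int> SaveWithRetryAsync(
    Func<Task<(int StatusCode, TimeSpan RetryAfter)>> operation, int maxAttempts)
{
    for (int attempt = 1; ; attempt++)
    {
        var (status, retryAfter) = await operation();
        if (status != 429 || attempt == maxAttempts)
            return status;
        await Task.Delay(retryAfter); // non-blocking wait, unlike Thread.Sleep
    }
}

int finalStatus = await SaveWithRetryAsync(FakeCreateDocumentAsync, maxAttempts: 5);
Console.WriteLine($"status {finalStatus} after {calls} calls");
```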

The Less Verbose Route

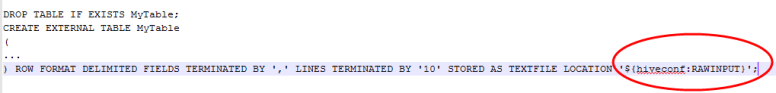

To do this I had to add another package to my project. In the NuGet Package Manager Console, run:

```powershell
Install-Package Microsoft.Azure.DocumentDB.TransientFaultHandling
```

**This is not a DocumentDB team library, and it is unclear whether it is still being maintained. What is clear is that the DocumentDB team will be bringing this retry logic into the SDK natively. This will mean a lighter, more consistent experience and no reliance on an external library.**

Now add the following using directives to your code:

```csharp
using Microsoft.Azure.Documents.Client.TransientFaultHandling;
using Microsoft.Azure.Documents.Client.TransientFaultHandling.Strategies;
using Microsoft.Practices.EnterpriseLibrary.TransientFaultHandling;
```

Now, instead of instantiating a regular DocumentClient in your application, you can do this:

```csharp
private IReliableReadWriteDocumentClient CreateClient(string uri, string key)
{
    // Connect directly to the backend over TCP
    ConnectionPolicy policy = new ConnectionPolicy()
    {
        ConnectionMode = ConnectionMode.Direct,
        ConnectionProtocol = Protocol.Tcp
    };

    var documentClient = new DocumentClient(new Uri(uri), key, policy);

    // Exponential back-off between retries; FastFirstRetry makes the first retry immediate
    var documentRetryStrategy = new DocumentDbRetryStrategy(RetryStrategy.DefaultExponential) { FastFirstRetry = true };

    // Wrap the client so throttled requests are retried automatically
    return documentClient.AsReliable(documentRetryStrategy);
}
```
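To get a feel for what an exponential retry strategy with a fast first retry does, here is a small, self-contained sketch of a capped exponential back-off schedule. It is in the same spirit as the strategy above but is not the library’s implementation: the minimum, maximum and delta values are illustrative assumptions, not the library’s defaults, and the random jitter a real strategy usually adds is left out so the schedule is deterministic.

```csharp
using System;
using System.Collections.Generic;

// Illustrative values only; they are NOT taken from the TransientFaultHandling library.
var minBackoff = TimeSpan.FromSeconds(1);
var maxBackoff = TimeSpan.FromSeconds(30);
var deltaBackoff = TimeSpan.FromSeconds(2);

// Delay before retry number `retry` (1-based). With fastFirstRetry the first
// retry happens immediately; later delays grow exponentially up to maxBackoff.
TimeSpan DelayFor(int retry, bool fastFirstRetry)
{
    if (fastFirstRetry && retry == 1)
        return TimeSpan.Zero;
    double growth = Math.Pow(2, retry - 1) - 1; // 0, 1, 3, 7, 15, ...
    var delay = minBackoff + TimeSpan.FromTicks((long)(deltaBackoff.Ticks * growth));
    return delay < maxBackoff ? delay : maxBackoff;
}

var schedule = new List<TimeSpan>();
for (int retry = 1; retry <= 6; retry++)
    schedule.Add(DelayFor(retry, fastFirstRetry: true));

Console.WriteLine(string.Join(", ", schedule));
```

With these values the first retry is immediate, the next waits grow quickly (3, 7 and 15 seconds), and from the fifth retry onward every wait is capped at 30 seconds.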

Summary

You may never hit the upper limit of the Request Units provisioned for your collection, but in an IoT scenario like this one, or when doing large scans over a huge collection of documents, you may well hit this error, and you need to know how to deal with it. This article has shown you two ways to handle it. Enjoy!

My thanks go to Ryan Crawcour of the DocumentDB team for proofreading and sanity checking.